The Knowledge of RAG

This is my study note on the base RAG model. The required background, such as Transformer, BERT, BART, and DPR, is also covered.

Introduction

Pre-trained language models’ ability to access and precisely manipulate knowledge is still limited, and hence on knowledge-intensive tasks, their performance lags behind task-specific architectures. Additionally, providing provenance for their decisions and updating their world knowledge remain open research problems.

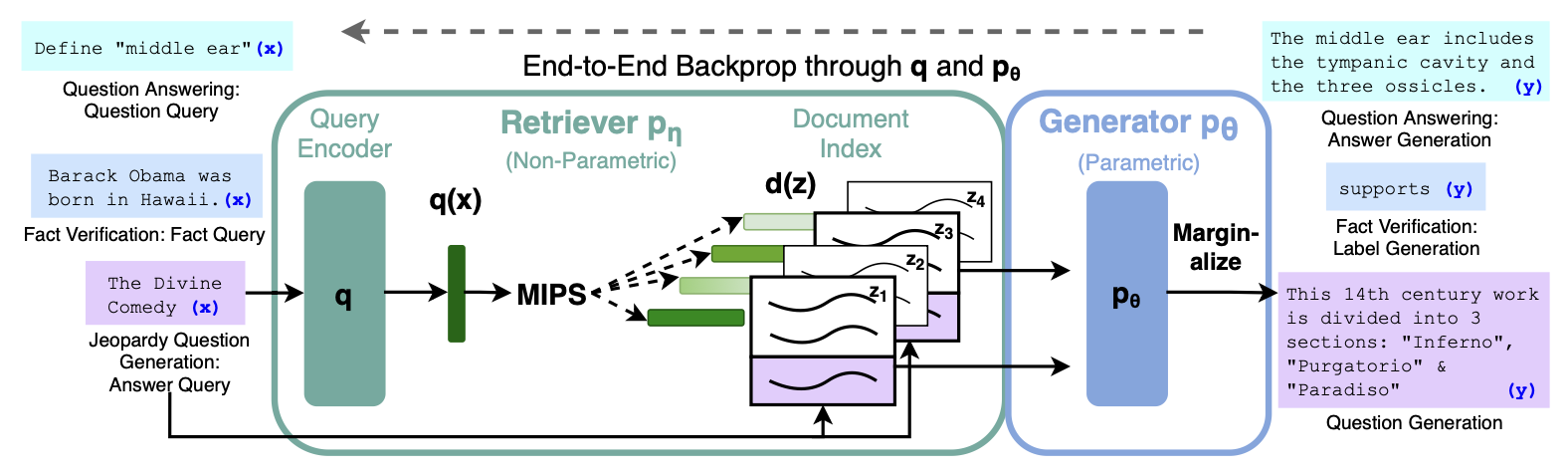

RAG combines pre-trained parametric and non-parametric memory for language generation: the parametric memory is a pre-trained seq2seq transformer, and the non-parametric memory is a dense vector index of Wikipedia, accessed with a pre-trained neural retriever.

The authors combine these components in a probabilistic model trained end-to-end.

The retriever (Dense Passage Retriever, henceforth DPR) provides latent documents conditioned on the input, and the seq2seq model (BART) then conditions on these latent documents together with the input to generate the output.

Two Components of RAG

RAG models leverage two components:

- Retriever:

A retriever \(p_{\eta}(z \mid x)\) with parameters \(\eta\) that returns a (top-K truncated) distribution over text passages given the input \(x\).

- Generator:

A generator \(p_{\theta}(y_i \mid x,z,y_{1:i-1})\) parameterized by \(\theta\) that generates the current token \(y_i\) based on the previous \(i - 1\) tokens \(y_{1:i-1}\), the original input \(x\), and the retrieved text passage \(z\).

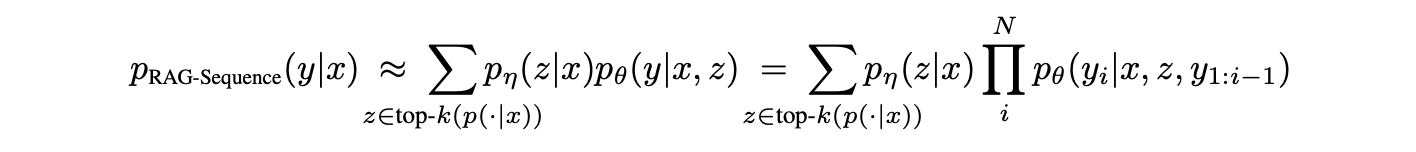

Two Different Models

There are two RAG models, which marginalize over the latent documents in different ways. As a reminder of what marginalization is, consider the following example:

Suppose you’re interested in how a person’s exercise habits influence their health. You can write this as \(P(\text{health} \mid \text{exercise})\). However, people’s health is also affected by their age, so what you actually measure is \(P(\text{health}, \text{age} \mid \text{exercise})\), the joint probability of health and age given exercise habits. To focus on just the impact of exercise on health, marginalization allows you to sum over all possible ages:

\[P(\text{health} \mid \text{exercise}) = \sum_{\text{age}} P(\text{health}, \text{age} \mid \text{exercise})\]

Let’s come back to RAG models. The difference between the two models is in how they marginalize over the latent documents:

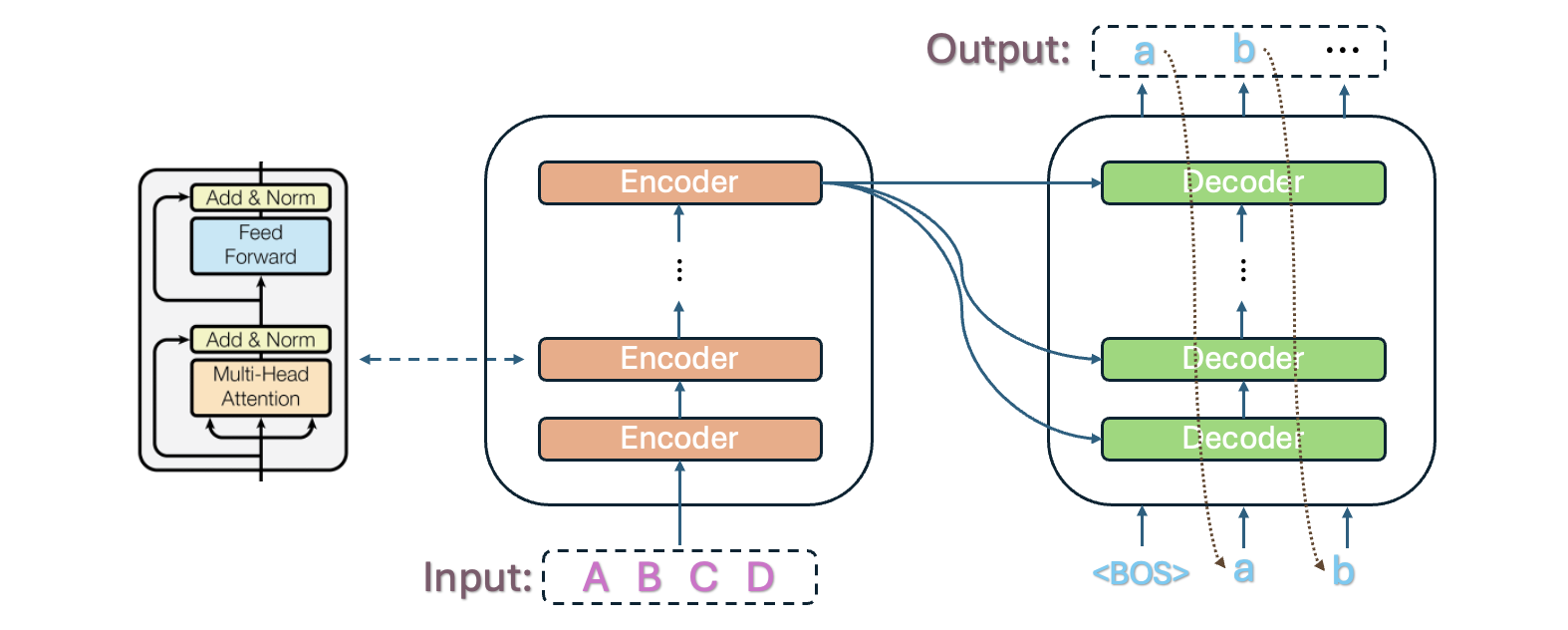

- RAG-Sequence: The RAG-Sequence model uses the same retrieved document to generate the complete sequence. It treats the retrieved document as a single latent variable: for each of the top-K documents, it generates an entire answer conditioned on that one document, and it marginalizes over the top-K documents only at the end.

- RAG-Token: The RAG-Token model draws a different latent document for each target token and marginalizes accordingly. When generating each token of the answer, it can rely on a different document from the top-K retrieved documents. It marginalizes at each token generation step, which allows content from multiple documents to be incorporated into the answer and gives a more diverse and flexible output.
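
Written out with the retriever and generator defined above, the two approximations from the RAG paper look as follows. Here \(N\) is the length of the target sequence \(y\), and both sums run over the top-K passages returned by the retriever:

\[p_{\text{RAG-Sequence}}(y \mid x) \approx \sum_{z \in \text{top-}K(p_{\eta}(\cdot \mid x))} p_{\eta}(z \mid x) \prod_{i=1}^{N} p_{\theta}(y_i \mid x, z, y_{1:i-1})\]

\[p_{\text{RAG-Token}}(y \mid x) \approx \prod_{i=1}^{N} \sum_{z \in \text{top-}K(p_{\eta}(\cdot \mid x))} p_{\eta}(z \mid x) \, p_{\theta}(y_i \mid x, z, y_{1:i-1})\]

The only difference is the order of the sum and the product: RAG-Sequence sums over documents once for the whole sequence, while RAG-Token sums over documents inside every token step.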

**Imagine you ask a question and want an answer from an intelligent assistant. The assistant finds several relevant books in a library and then writes an entire answer from each book, referring to that same book until the end of the answer. After that, it compares the candidate answers, weighs each one by how relevant its book is, and gives the final answer. The RAG-Sequence model is like this assistant: it always relies on the same book (one document) while generating an answer, and then combines the answers generated from multiple documents. The RAG-Token assistant is different. Instead of sticking to one book, it can consult a different book each time it wants to generate a word. For example, it might use the first book to generate the first word and the second book to generate the next word. Finally, it puts the words it picked together to form a complete answer. The RAG-Token model can refer to different books (documents) when generating each word, so each word can potentially originate from a different place.**

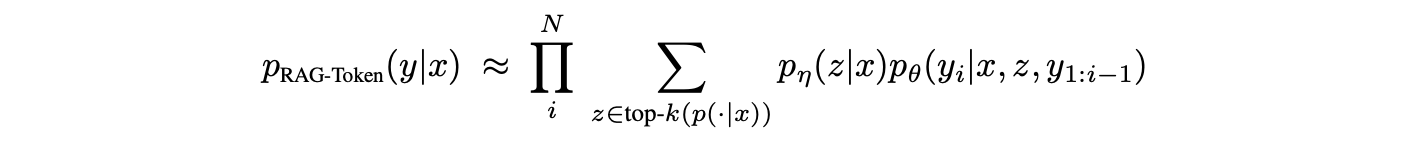
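
To make the difference concrete, here is a minimal sketch in plain Python that computes both marginalizations for a toy case. The function names and all probabilities are made up for illustration; in a real RAG model, `doc_probs` would come from the retriever and `token_probs` from the BART generator.

```python
import math

def rag_sequence_prob(doc_probs, token_probs):
    """p(y|x) ~= sum_z p(z|x) * prod_i p(y_i | x, z, y_<i)."""
    return sum(
        p_z * math.prod(per_token)
        for p_z, per_token in zip(doc_probs, token_probs)
    )

def rag_token_prob(doc_probs, token_probs):
    """p(y|x) ~= prod_i sum_z p(z|x) * p(y_i | x, z, y_<i)."""
    num_tokens = len(token_probs[0])
    return math.prod(
        sum(p_z * per_token[i] for p_z, per_token in zip(doc_probs, token_probs))
        for i in range(num_tokens)
    )

# Made-up numbers: K = 2 retrieved documents, target sequence of 2 tokens.
doc_probs = [0.6, 0.4]            # p(z | x) for each document
token_probs = [[0.9, 0.1],        # p(y_i | x, z_1, y_<i) for i = 1, 2
               [0.2, 0.8]]        # p(y_i | x, z_2, y_<i) for i = 1, 2

seq = rag_sequence_prob(doc_probs, token_probs)   # 0.6*0.09 + 0.4*0.16 = 0.118
tok = rag_token_prob(doc_probs, token_probs)      # 0.62 * 0.38 = 0.2356
```

In this toy case the first token is best supported by the first document and the second token by the second document, so RAG-Token assigns the sequence a higher probability than RAG-Sequence, which has to commit to one document per candidate answer.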

Transformer, BERT, and BART

Transformer is a neural network architecture that is particularly well-suited to natural language processing tasks. It uses self-attention to capture the relationships between the words in a sentence, encoding each word into a contextual vector that reflects the rest of the sentence. BERT and BART are both Transformer-based models that are pre-trained on large text corpora and then fine-tuned on a specific task. BERT is a bidirectional Transformer encoder that can be fine-tuned for a variety of tasks, including question answering, text classification, and token classification. BART is a sequence-to-sequence Transformer designed for text-to-text generation tasks, such as summarization, translation, and question answering.

The Transformer consists of two parts: the encoder and the decoder. The encoder processes the input sequence and produces a sequence of contextual representations, one vector per input token. The decoder attends to these encoder representations and produces the output sequence one token at a time. This attention mechanism lets the decoder focus on different parts of the input sequence at each generation step.

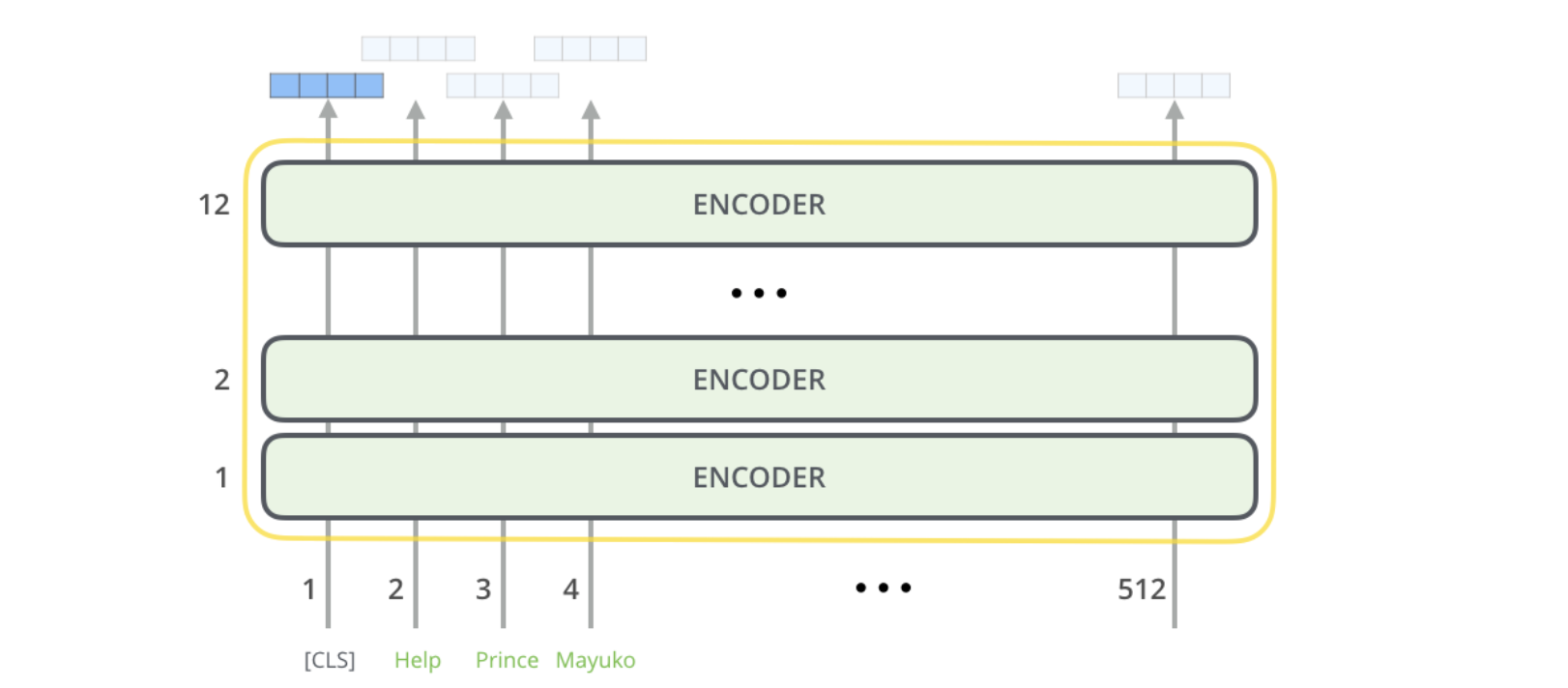
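
The core operation behind all of this is scaled dot-product attention, softmax(QK^T / sqrt(d_k))V. Below is a minimal dependency-free sketch of it. It is a simplification: a real Transformer first applies learned projection matrices to obtain Q, K, and V, and runs several attention heads in parallel, both of which are omitted here. The function names and toy vectors are illustrative only.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors (d = 2); self-attention uses the same vectors as Q, K, V.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(x, x, x)
```

Each row of `weights` is a probability distribution over the input tokens, and the output has one vector per token rather than a single vector for the whole sentence.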

BERT’s model architecture is a multi-layer bidirectional Transformer encoder.

In the base BERT model, there are 12 encoder layers (\(L=12\)), 12 attention heads in each layer (\(A=12\)), and a hidden size, which is also the dimension of each token vector, of 768 (\(H=768\)). BERT base has 110 million parameters in total. In the large BERT model, there are 24 encoder layers (\(L=24\)), 16 attention heads in each layer (\(A=16\)), and a hidden size of 1024 (\(H=1024\)). BERT large has 340 million parameters in total. In both cases, the feed-forward/filter size is 4 times the hidden size.
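
As a sanity check on these totals, the parameter count can be reproduced with some arithmetic. The sketch below rests on assumptions that are not stated in this note: a WordPiece vocabulary of 30,522 tokens (the uncased English vocabulary), 512 positions, 2 segment types, a pooler layer on top of [CLS], and no pre-training heads. The function name is hypothetical.

```python
def bert_param_count(L, H, vocab_size=30522, max_positions=512, type_vocab=2):
    """Count weights of a BERT encoder with L layers and hidden size H."""
    ffn = 4 * H                                   # feed-forward size is 4x hidden
    layer_norm = 2 * H                            # scale and bias
    embeddings = (vocab_size + max_positions + type_vocab) * H + layer_norm
    attention = 4 * (H * H + H)                   # Q, K, V and output projections
    feed_forward = (H * ffn + ffn) + (ffn * H + H)
    per_layer = attention + feed_forward + 2 * layer_norm
    pooler = H * H + H                            # dense layer on top of [CLS]
    return embeddings + L * per_layer + pooler

base = bert_param_count(L=12, H=768)     # 109,482,240
large = bert_param_count(L=24, H=1024)   # 335,141,888
```

Under these assumptions the counts come to about 109.5 million and 335.1 million, which is consistent with the rounded 110 million and 340 million figures. Note that the number of attention heads \(A\) does not appear: splitting the hidden size into heads does not change the number of weights.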

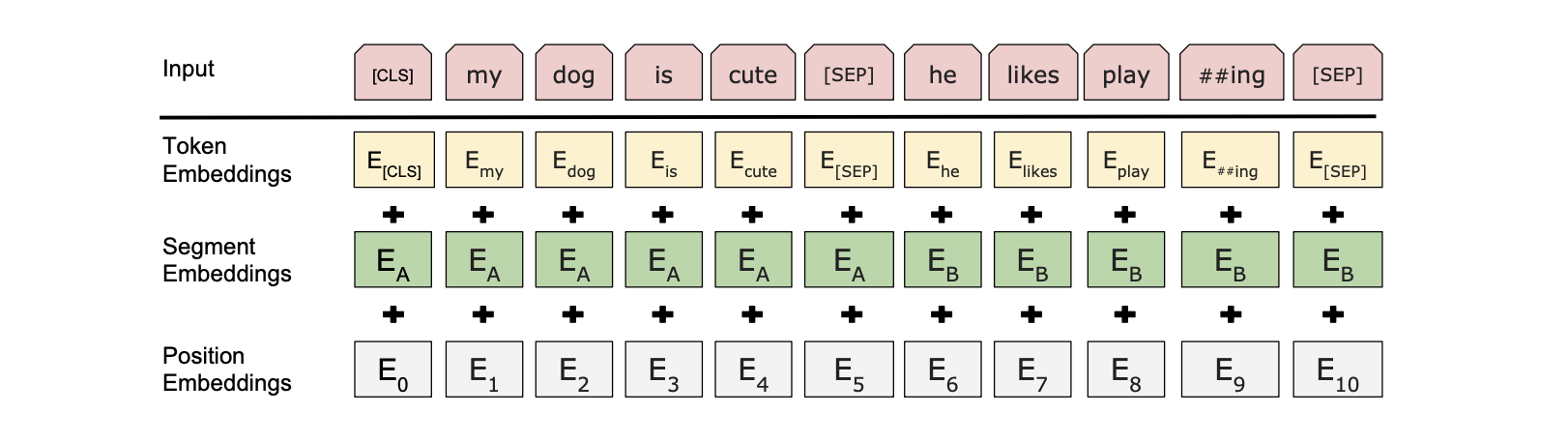

The input is first passed through the embedding layer, which holds a learned representation of each token in the vocabulary. The embedding layer in BERT consists of Token Embeddings, Position Embeddings, and Segment Embeddings, which are summed for every input token.
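
A minimal sketch of how the three embeddings combine, using tiny random tables in place of learned ones. All sizes, token ids, and names here are made up for illustration, and the layer normalization and dropout that BERT applies after the sum are left out.

```python
import random

random.seed(0)
H = 4  # toy hidden size (768 in BERT base)

def make_table(rows, dim):
    """Random stand-in for a learned embedding table."""
    return [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(rows)]

token_table = make_table(10, H)     # toy vocabulary of 10 token ids
position_table = make_table(8, H)   # toy maximum length of 8
segment_table = make_table(2, H)    # sentence A = 0, sentence B = 1

def embed(token_ids, segment_ids):
    """Sum token, position, and segment embeddings element-wise."""
    return [
        [t + p + s for t, p, s in zip(token_table[tok], position_table[pos], segment_table[seg])]
        for pos, (tok, seg) in enumerate(zip(token_ids, segment_ids))
    ]

token_ids = [1, 5, 7, 2, 6, 2]      # hypothetical ids, e.g. [CLS] a b [SEP] c [SEP]
segment_ids = [0, 0, 0, 0, 1, 1]
embedded = embed(token_ids, segment_ids)
```

The same token id (2, standing in for [SEP]) appears at positions 3 and 5 but gets two different input vectors, because its position and segment embeddings differ.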

The embeddings are then passed through the encoder layers: 12 in the base model and 24 in the large model. Each layer applies multi-head attention, passes the result through a fully connected feed-forward network, and hands its output to the next encoder layer.

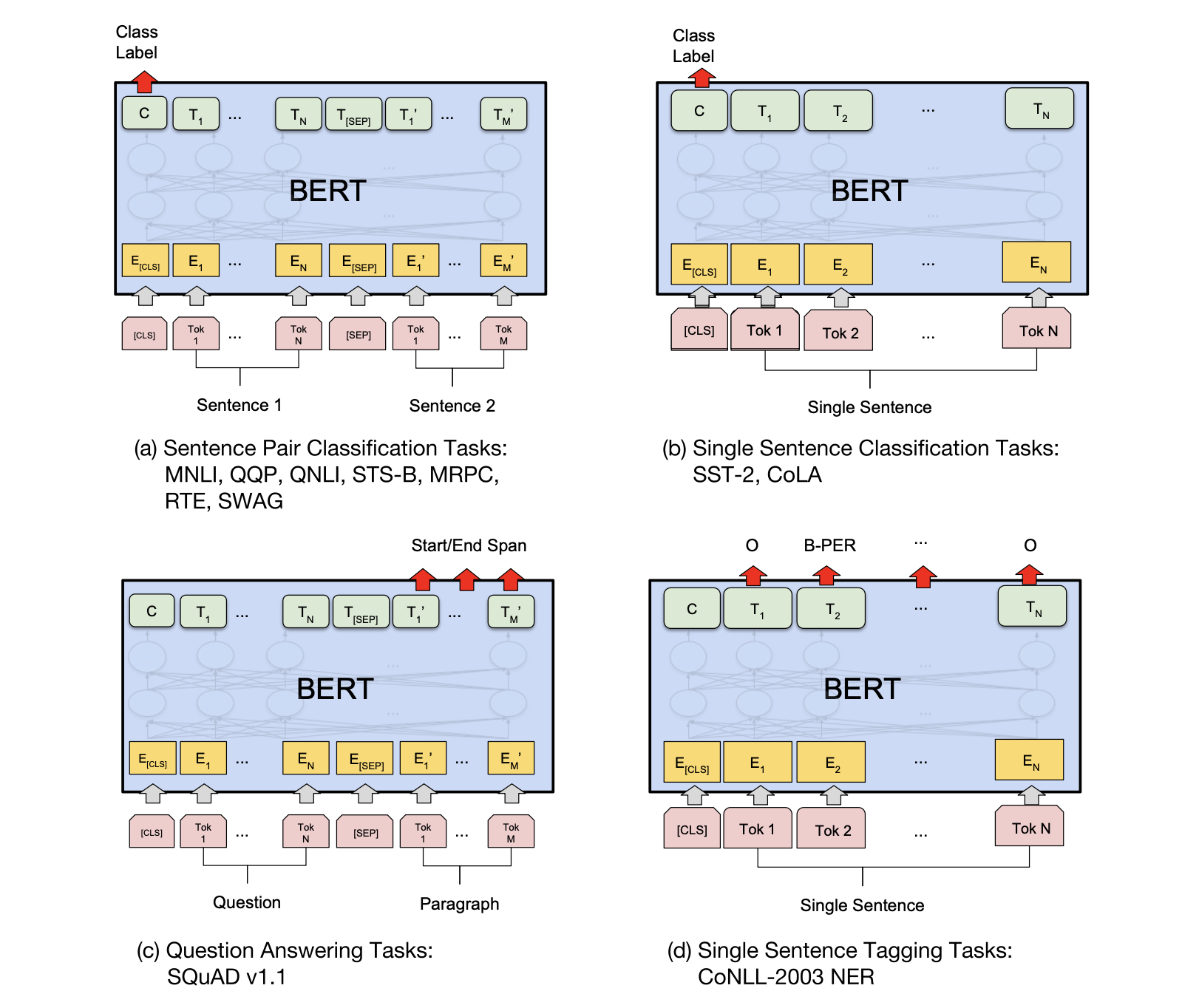

The output sequence is taken from the final encoder layer. It is processed differently depending on the downstream task.

- Link to the paper of BERT: https://arxiv.org/abs/1810.04805

- For more information about BERT: https://jalammar.github.io/illustrated-bert/

How is BERT bidirectional?

BERT is bidirectional because it uses context from both sides of the current word: instead of using just the previous few words, it uses the whole sequence. The BERT pre-training process consists of two tasks: (1) masked language modeling (Masked LM) and (2) next sentence prediction (NSP). The bidirectional structure shows up in Masked LM. For example, when the sentence "Tom likes to study [MASK] Learning" is fed into the model, the [MASK] position combines information from both its left and right context through attention. Attention itself is bidirectional; GPT makes it unidirectional with an attention mask, so that the [MASK] position cannot see "Learning" and sees only the preceding words "Tom likes to study".

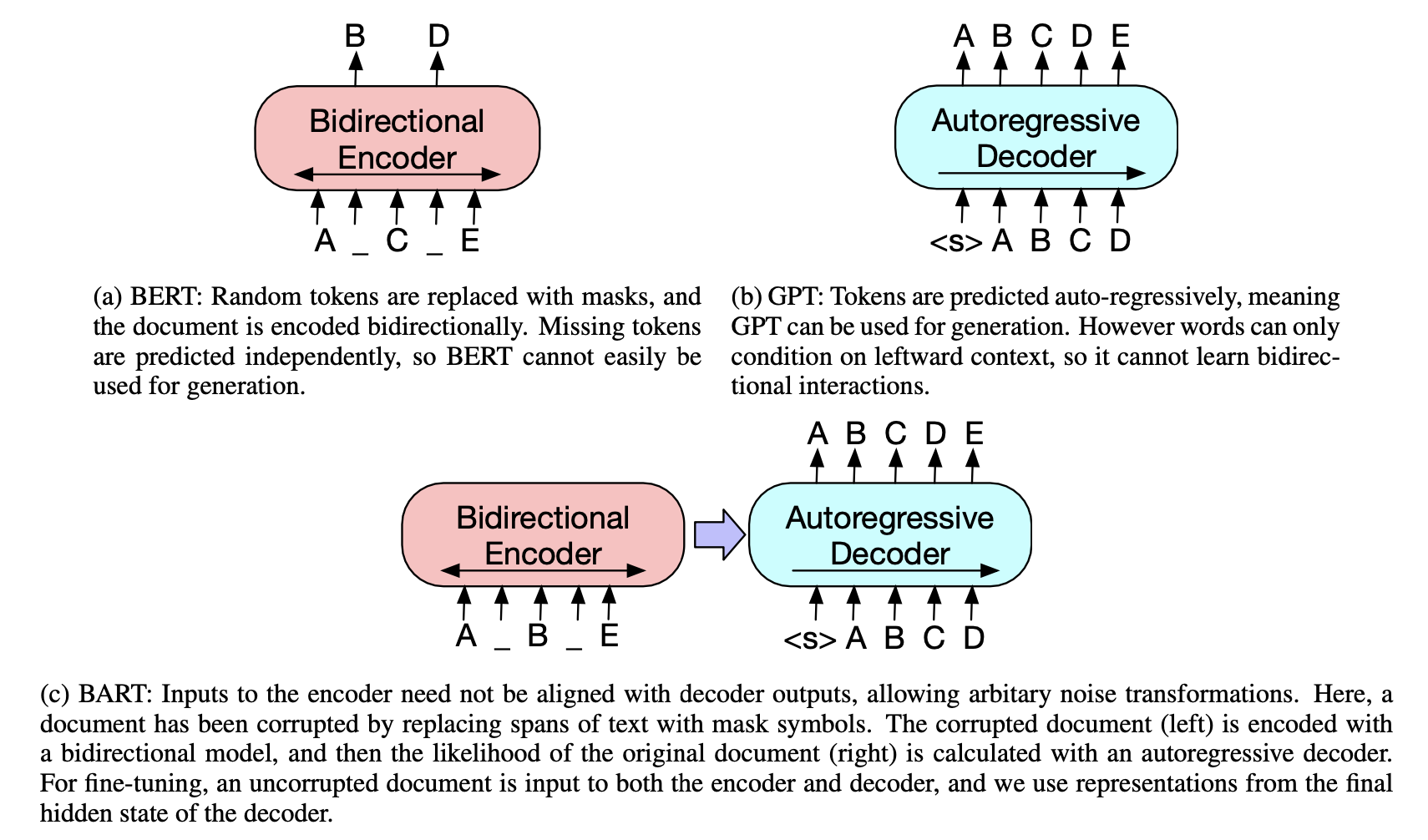
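
The difference between the two kinds of attention comes down to the attention mask. The sketch below builds both masks for the example sentence and lists which tokens the [MASK] position is allowed to attend to. It only illustrates the masking pattern: the helper names are made up, and a GPT-style model would not actually contain a [MASK] token in its input.

```python
def bidirectional_mask(n):
    """BERT-style: every position may attend to every position."""
    return [[1] * n for _ in range(n)]

def causal_mask(n):
    """GPT-style: position i may attend only to positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

tokens = ["Tom", "likes", "to", "study", "[MASK]", "Learning"]
mask_pos = tokens.index("[MASK]")

def visible(mask, i):
    """Tokens that position i is allowed to attend to under the given mask."""
    return [tok for tok, allowed in zip(tokens, mask[i]) if allowed]

bert_view = visible(bidirectional_mask(len(tokens)), mask_pos)
gpt_view = visible(causal_mask(len(tokens)), mask_pos)
```

With the bidirectional mask the [MASK] position sees all six tokens, including "Learning"; with the causal mask it sees only the tokens up to and including itself.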

BART is a sequence-to-sequence transformer model that combines both Bidirectional and Auto-Regressive Transformers. It is a denoising autoencoder, pre-trained to reconstruct corrupted input sequences. This architecture makes it highly versatile and suitable for a wide variety of downstream tasks.

BART is trained by:

- (1) Corrupting text with an arbitrary noising function

- (2) Learning a model to reconstruct the original text

BART can be seen as generalizing BERT (due to the bidirectional encoder), GPT (with the left-to-right decoder), and many other more recent pretraining schemes. Like BERT, it has a bidirectional encoder that uses context from both sides of a word. Unlike BERT, BART uses an autoregressive decoder similar to GPT, where the current word depends on the previously generated words, enabling it to generate natural language. Additionally, BART employs multiple noising transformations, similar to BERT’s random word masking, to intentionally make the data challenging, forcing the model to learn how to generate coherent text under difficult conditions.

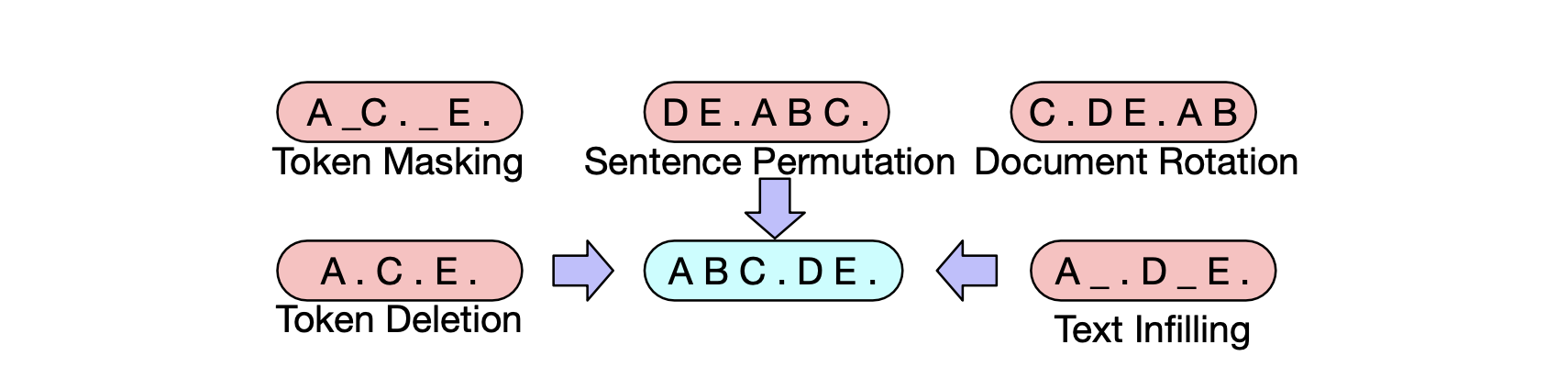

BART is pre-trained by corrupting documents and optimizing a reconstruction loss, so the model learns to recover the original document from its corrupted version. Unlike existing denoising autoencoders, which are tailored to specific noising schemes, BART allows any type of document corruption to be applied; in the extreme case, where all information about the source is lost, it is equivalent to a language model. The BART authors experiment with several corruption strategies: Token Masking (replacing random tokens with [MASK]), Token Deletion (removing random tokens), Text Infilling (replacing each sampled text span with a single [MASK] token), Sentence Permutation (shuffling sentence order), and Document Rotation (rotating the document so that it starts at a randomly chosen token). These transformations, shown with examples in the figure below (Figure 2 of the BART paper), offer a starting point for developing new corruption techniques.

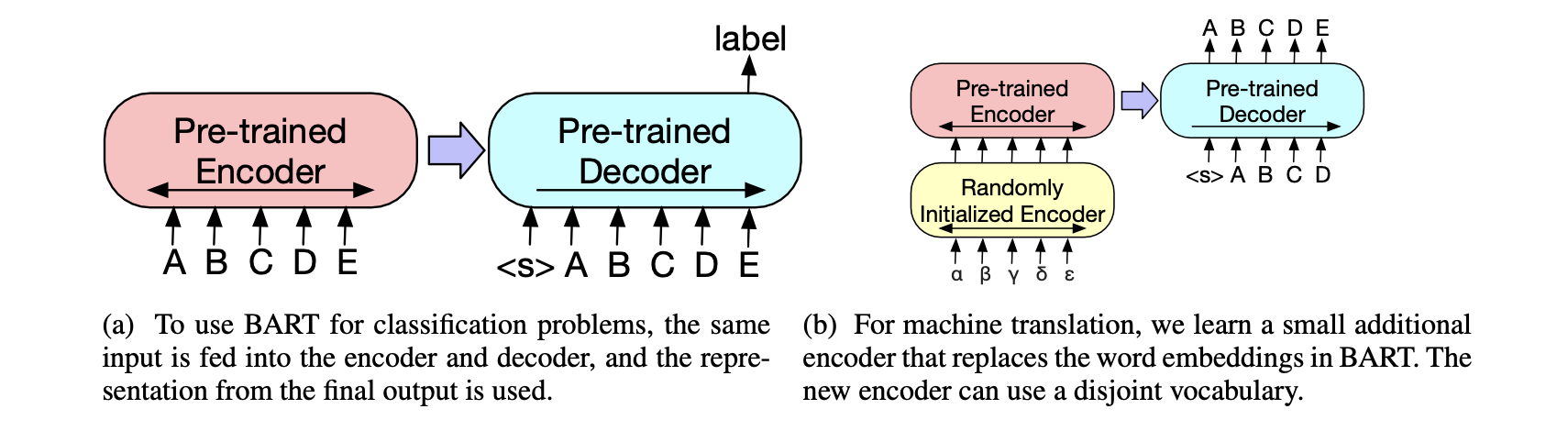
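
The five transformations are simple enough to sketch in a few lines. The following is an illustrative toy version operating on whitespace tokens, not BART's actual preprocessing code: the function names and the probability `p=0.3` are assumptions, and the span for text infilling is passed in explicitly, whereas the BART paper samples span lengths from a Poisson distribution.

```python
import random

def token_masking(tokens, rng, p=0.3):
    """Replace each token with [MASK] with probability p."""
    return [("[MASK]" if rng.random() < p else t) for t in tokens]

def token_deletion(tokens, rng, p=0.3):
    """Drop each token with probability p."""
    return [t for t in tokens if rng.random() >= p]

def text_infilling(tokens, start, length):
    """Replace the span tokens[start:start+length] with a single [MASK]."""
    return tokens[:start] + ["[MASK]"] + tokens[start + length:]

def sentence_permutation(sentences, rng):
    """Shuffle the order of sentences."""
    shuffled = sentences[:]
    rng.shuffle(shuffled)
    return shuffled

def document_rotation(tokens, rng):
    """Rotate the document so it starts at a randomly chosen token."""
    k = rng.randrange(len(tokens))
    return tokens[k:] + tokens[:k]

rng = random.Random(0)
doc = "A B C . D E .".split()
masked = token_masking(doc, rng)
deleted = token_deletion(doc, rng)
infilled = text_infilling(doc, start=1, length=2)   # A [MASK] . D E .
permuted = sentence_permutation([["A", "B", "C", "."], ["D", "E", "."]], rng)
rotated = document_rotation(doc, rng)
```

During pre-training, the corrupted sequence is fed to the encoder and the decoder is trained to output the original `doc`.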

The representations produced by BART can be used in several ways for downstream applications. To fine-tune BART for classification, the same input is fed into both the pre-trained encoder and decoder, with the representation from the final output of the decoder used to predict the label. For machine translation, a small additional encoder is learned to replace the word embeddings in the original BART model. This new encoder uses a disjoint vocabulary, allowing for effective translation between languages.

- Link to paper: https://arxiv.org/abs/1910.13461

DPR

Dense Passage Retrieval (DPR) is a set of tools and models for state-of-the-art open-domain Q&A research. Given a collection of \(M\) text passages, the goal of DPR is to index all the passages in a low-dimensional and continuous space, such that it can retrieve efficiently the top-k passages relevant to the input question for the reader at run-time. Note that \(M\) can be very large and \(k\) is usually small, such as 20 to 100.

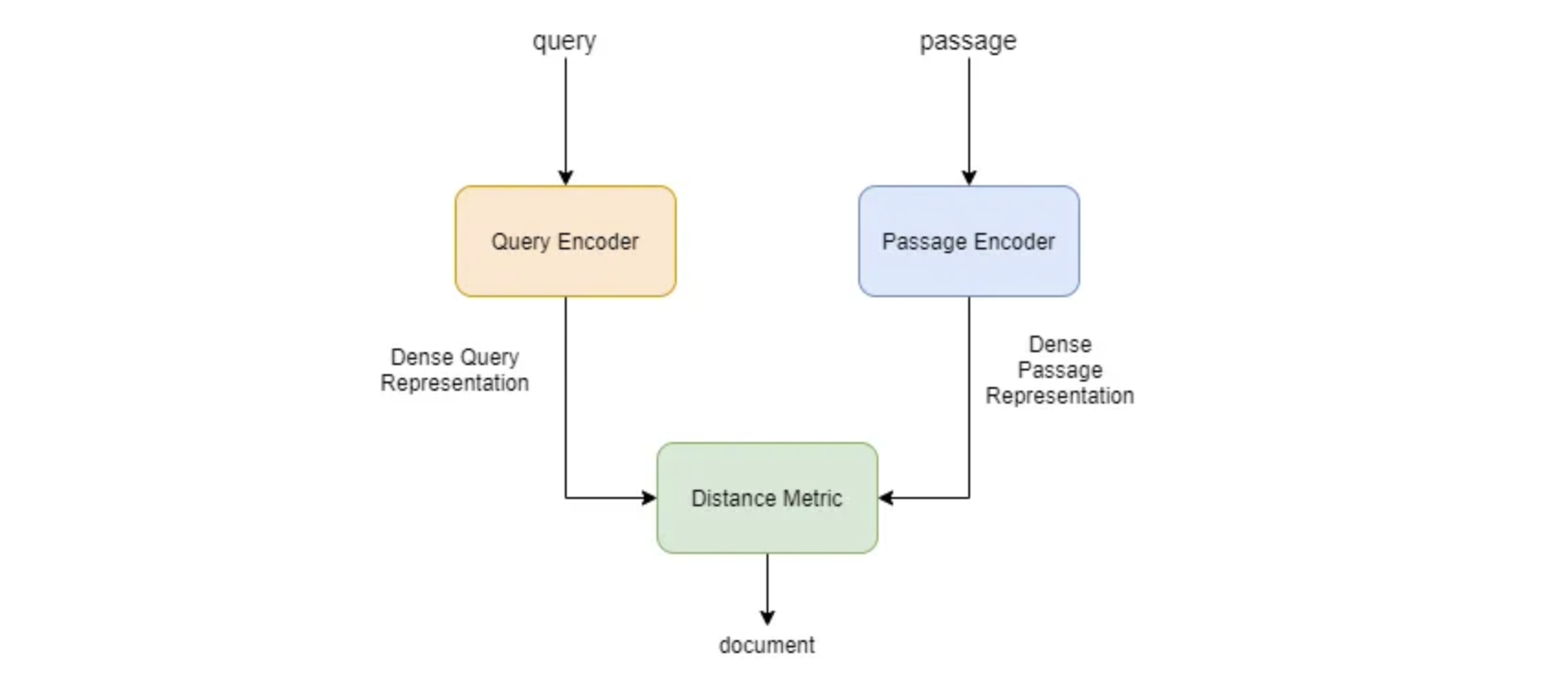

Two independent BERT (base) encoders are used: a passage encoder \(E_P\) and a question encoder \(E_Q\). The representation at the [CLS] token is taken as the output. The passage encoder maps every text passage to a \(d\)-dimensional real-valued vector, and these vectors are indexed ahead of time. At retrieval time, the question encoder maps the input question to a \(d\)-dimensional vector, and DPR finds the closest passages by computing the similarity between the question and each passage as the dot product of their vectors.

\[\operatorname{sim}(q, p)=E_{Q}(q)^{\top} E_{P}(p)\]

The similarity function needs to be decomposable for efficient computation, with many functions, like cosine and Mahalanobis distance, being transformations of Euclidean distance (L2). DPR chooses the inner product function due to its efficiency and the potential for improving retrieval performance by learning better encoders.
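
As a rough illustration of the retrieval step, the sketch below scores a question vector against a handful of pre-computed passage vectors and keeps the top k. The vectors are hypothetical stand-ins for the outputs of the two BERT encoders, and the brute-force loop is a simplification: DPR itself uses a FAISS index to search millions of passages efficiently.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def retrieve_top_k(question_vec, passage_vecs, k):
    """Return (passage_index, score) pairs for the k highest inner products."""
    scored = [(i, dot(question_vec, p)) for i, p in enumerate(passage_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical pre-computed passage embeddings for M = 4 passages (d = 3).
passage_vecs = [
    [0.1, 0.9, 0.0],
    [0.8, 0.1, 0.1],
    [0.4, 0.4, 0.2],
    [0.0, 0.2, 0.9],
]
question_vec = [1.0, 0.0, 0.5]   # hypothetical question embedding

top = retrieve_top_k(question_vec, passage_vecs, k=2)
top_indices = [i for i, _ in top]   # passages 1 and 2 score highest here
```

Because the passage vectors do not depend on the question, they can be computed once offline; only the question has to be encoded at run-time.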

- Link to paper: https://arxiv.org/abs/2004.04906

- Link to Github: https://github.com/facebookresearch/DPR

Retriever

Let’s go back to the RAG model. The retriever component \(p_{\eta}(z \mid x)\) is based on DPR. A pre-trained bi-encoder from DPR is used to initialize the retriever and to build the document index. This retriever was trained to retrieve documents that contain answers to TriviaQA questions and Natural Questions. The document index is referred to as the non-parametric memory.
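
In the RAG paper, the retriever distribution is proportional to the exponential of the inner product between the document and query embeddings, which over the top-K documents amounts to a softmax of their scores. A minimal sketch with made-up scores (the function name is illustrative):

```python
import math

def retrieval_distribution(scores):
    """Softmax over the inner-product scores of the top-K passages."""
    m = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

scores = [0.85, 0.5, 0.45]   # hypothetical inner products for K = 3 passages
p_z = retrieval_distribution(scores)
```

These probabilities are the weights that RAG-Sequence and RAG-Token use when they marginalize over the retrieved documents.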

Generator

The generator component \(p_{\theta}(y_i \mid x,z,y_{1:i-1})\) is based on BART (large). It was pre-trained using a denoising objective and a variety of noising functions. It has obtained state-of-the-art results on a diverse set of generation tasks and outperforms comparably sized T5 models. The BART generator parameters \(\theta\) are referred to as the parametric memory henceforth.